Demosaicing

I spent a good bit of 2014 working on demosaicing of images from an unusual image sensor.

Little particles of energy bounce around the world. Our eyes are great energy detectors. We see higher energy particles as more blue. We see lower energy particles as more red. The middling energy particles we see as green-ish. There's a spectrum, blue into green, green into red via yellow and orange. We call that the rainbow. Color is how our eyes describe the varying powers of energy.

Our eyes, it turns out, cheat a bit when detecting this spectrum of energy. There are these cells, called cone cells, towards the back of our eyes. There are three types. One is good at detecting higher energy particles, what we call blue. One detects mid-energy particles, which we call green. And, of course, one can detect lower energy particles, which we call red. The ranges of these detectors overlap enough that if lower-mid energy particles come in, they will trigger the red-detecting and green-detecting cone cells, and we'll hazard the guess that we're seeing yellow.

This works pretty well. Or at least, it works well enough that our cameras don't need to work better. Hence, cameras have sensors to detect three distinct colors—blue, green, and red. And that is enough to record a color image which will read as realistic to the human eye.

There are some catches to this. For one, it's really hard to simultaneously detect how blue, green, and red light is at the same spot. So standard practice is to put color sensors next to each other, to form a mosaic of those three colors. This is practical for sensor construction, but makes it tricky to reconstruct an image.

When computers manipulate images, it is easiest for them to think of the image as divided up into spots on a grid. Each spot (known as a pixel) has a particular color. Often, that pixel contains a description of how red, green, and blue that spot is. The mosaic system of detecting color doesn't produce images with such full information. It produces pixels with one color component (say, blue) known, but the other two (say, red and green) unknown. Essentially, at any given point, an image sensor will miss two thirds of the information.
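To make that loss concrete, here is a minimal Python sketch that throws away everything a mosaiced sensor would not record. The 2x2 layout in `PATTERN` and the tiny test image are assumptions made up for illustration, not the layout of any particular camera.

```python
# Hypothetical 2x2 layout, for illustration only: which channel
# (0 = red, 1 = green, 2 = blue) each sensor site records.
PATTERN = [[0, 1],
           [1, 2]]

def mosaic(rgb_image):
    """Keep only the one channel each sensor site would record.

    rgb_image is a list of rows; each pixel is an (r, g, b) tuple.
    Returns a same-sized grid holding a single intensity per site.
    """
    return [
        [pixel[PATTERN[y % 2][x % 2]] for x, pixel in enumerate(row)]
        for y, row in enumerate(rgb_image)
    ]

# A made-up 2x2 full-color image.
full = [
    [(10, 20, 30), (40, 50, 60)],
    [(70, 80, 90), (100, 110, 120)],
]
raw = mosaic(full)

values_kept = sum(len(row) for row in raw)           # one value per site
values_in_full = 3 * sum(len(row) for row in full)   # three values per site
print(raw)                           # [[10, 50], [80, 120]]
print(values_kept / values_in_full)  # one third survives
```

Each site keeps one number out of three, so two thirds of the full-color data is gone before any processing starts.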

The process of producing data which is more vernacular to a computer—one with red, green, and blue components for each pixel—is called demosaicing. The standard way to do this is to make a decent guess. Consider a pixel with a known green intensity, but no known blue or red intensity. The computer can look to neighboring pixels with known blue intensities to interpolate the unknown blue intensity. And similarly, by looking at neighboring red pixels, the computer can do a decent job of guessing the unknown red intensity.

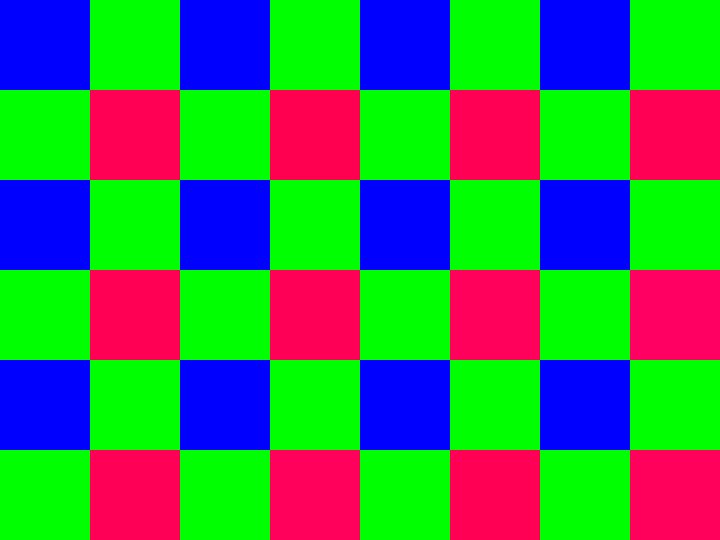
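The "decent guess" described above can be sketched in a few lines of Python. This is a naive neighbor-averaging demosaic, assuming a hypothetical 2x2 layout (`PATTERN`) and plain lists for images. It is not the method used by any particular tool, only the simplest version of the idea.

```python
# Assumed layout for illustration: 0 = red, 1 = green, 2 = blue on a 2x2 tile.
PATTERN = [[0, 1],
           [1, 2]]

def channel_at(y, x):
    """Which channel the sensor site at row y, column x records."""
    return PATTERN[y % 2][x % 2]

def demosaic(raw):
    """Guess the two missing channels at each site by averaging neighbors.

    raw is a grid (list of rows) of single intensities, at least 2x2.
    Returns a grid of (r, g, b) tuples.
    """
    height, width = len(raw), len(raw[0])
    out = []
    for y in range(height):
        out_row = []
        for x in range(width):
            pixel = []
            for channel in range(3):
                if channel_at(y, x) == channel:
                    pixel.append(raw[y][x])  # measured here, keep as-is
                    continue
                # Gather sites in the surrounding 3x3 window (clipped at
                # the image border) that did record this channel.
                samples = [
                    raw[ny][nx]
                    for ny in range(max(y - 1, 0), min(y + 2, height))
                    for nx in range(max(x - 1, 0), min(x + 2, width))
                    if channel_at(ny, nx) == channel
                ]
                pixel.append(sum(samples) / len(samples))
            out_row.append(tuple(pixel))
        out.append(out_row)
    return out

# A 4x4 patch of one uniform color (red 200, green 100, blue 50), as the
# sensor would record it.
flat = [[(200, 100, 50)[channel_at(y, x)] for x in range(4)] for y in range(4)]
rebuilt = demosaic(flat)
print(rebuilt[1][2])
```

On a patch of uniform color the guess is exact. Where neighboring pixels differ sharply, such as at edges, averaging mixes colors from both sides, which is one reason cleverer methods exist.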

The standard mosaic of color-detectors for digital cameras is called the Bayer filter. Bryce Bayer, a Kodak engineer, patented this arrangement back in 1976. Bayer described a regular pattern, a 2x2 grid. It's clever in its simplicity.

In Bayer's checkerboard, every other square is green-sensitive. The remainder of the squares alternate in strips of either red or blue-sensitive pixels. Our eyes are most attuned to detecting mid-energy light, which we see as green. In fact, the dominant energy-detectors in our eyes are luminosity-sensitive rod cells, which are attuned to mid-energy, and hence green-ish, light. It makes sense to have so many green-sensitive squares on this checkerboard, as these squares give a good sense of how we see the lightness or darkness of the image. The intervening red and blue squares then detect color—how much the image has strayed from middle green—at a lower resolution.
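A short Python sketch shows how the checkerboard falls out of repeating a 2x2 tile. The ordering of `BAYER_TILE` below is one common choice and is an assumption here; cameras differ in which corner the tile starts on.

```python
from collections import Counter

# One common ordering of the 2x2 tile (assumed for illustration).
BAYER_TILE = [["R", "G"],
              ["G", "B"]]

def cfa_layout(height, width, tile=BAYER_TILE):
    """Repeat the tile to cover a height x width sensor."""
    tile_h, tile_w = len(tile), len(tile[0])
    return [
        [tile[y % tile_h][x % tile_w] for x in range(width)]
        for y in range(height)
    ]

layout = cfa_layout(4, 8)
for row in layout:
    print(" ".join(row))

# Count how much of the sensor each color gets.
counts = Counter(color for row in layout for color in row)
print(counts["G"], counts["R"], counts["B"])  # 16 8 8
```

Half the sites are green and a quarter each are red and blue, with the non-green sites falling in alternating rows of red and blue.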

The Bayer sensor pattern is great. Decades of work have gone into figuring out clever ways to demosaic images recorded by it. It can be processed rapidly by naive techniques, and clever techniques have been invented which work quite well and are reasonably fast.

Of course, other sensor patterns have come along. In 2013 I started using a digital camera built with an exotic 6x6 matrix, known as the X-Trans filter. There hasn't been that much research into processing this pattern. The great open source photographic tool darktable did not have code to handle the X-Trans mosaic. Fortunately, Dave Coffin's tool dcraw did have a few methods to handle X-Trans patterns. The best one is credited to a mysterious coder named Frank Markesteijn. I realized that it would be possible to adapt Markesteijn's code into darktable.
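To give a feel for the difference between the two patterns, here is a small Python sketch that looks up the color of any sensor site from a repeating tile of either size. The 6x6 tile below is the layout as it is commonly documented for X-Trans sensors; treat it as an assumption, since the starting offset differs between camera models. The names `color_at` and `shares` are made up for this example and do not come from dcraw or darktable.

```python
from collections import Counter

# Assumed tiles, one string per row. The Bayer ordering is one common choice.
BAYER_TILE = ["RG",
              "GB"]
XTRANS_TILE = ["GBGGRG",
               "RGRBGB",
               "GBGGRG",
               "GRGGBG",
               "BGBRGR",
               "GRGGBG"]

def color_at(tile, y, x):
    """Color recorded at row y, column x when the tile repeats forever."""
    return tile[y % len(tile)][x % len(tile[0])]

def shares(tile):
    """Fraction of sensor sites devoted to each color."""
    counts = Counter("".join(tile))
    total = sum(counts.values())
    return {color: counts[color] / total for color in "RGB"}

print(shares(BAYER_TILE))
print(shares(XTRANS_TILE))

# In this 6x6 layout every row and every column holds all three colors,
# whereas a Bayer row holds only two.
xtrans_columns = ["".join(col) for col in zip(*XTRANS_TILE)]
print(all(set(line) == set("RGB") for line in XTRANS_TILE + xtrans_columns))
```

Code written around a 2x2 tile tends to hard-code which neighbors hold which color. A lookup like `color_at` that takes the tile as a parameter is one way such code might be generalized to a 6x6 pattern.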

Pulling Markesteijn's code in and making it work well took a bit of time. And other components of darktable work on mosaiced images and expected a Bayer pattern. I wrote versions of the most important of these to work with the X-Trans pattern.

A tremendously talented programmer named Ingo Weyrich adapted the new darktable X-Trans code into another tool, RawTherapee, and in the process created some great changes which made the code far faster while using less memory. I was able to bring many of those improvements back into darktable. Users of darktable were generous with testing the code and contributing images to diagnose its failures. The rawspeed library added the fundamental code which reads images from X-Trans cameras. The darktable developers worked to make sure that the code was good enough for inclusion, and indeed darktable 1.6, released in December 2014, has initial support for X-Trans sensors.

Working on demosaicing gives a fundamental understanding of how digital cameras record the world, how software processes these recordings, and how our eyes perceive—whether landscapes and people or digital images of such.

I gave a few talks about this work. A talk at the Sunview Luncheonette on Slumgullion touched on the phenomenology of demosaicing. Other venues at which I described this work include the Camera Club of NY, and M+B in L.A. (during a program of talks organized by Matthew Porter).